Understanding the Evolution of GPT: From Encoder Decoder to …

Dec 28, 2023 · The encoder can be any type of recurrent neural network (RNN), such as LSTM or GRU. The decoder, on the other hand, takes the context vector produced by the encoder and generates an output...

Understanding Encoder And Decoder LLMs - Sebastian Raschka, …

Jun 17, 2023 · Delve into Transformer architectures: from the original encoder-decoder structure, to BERT & RoBERTa encoder-only models, to the GPT series focused on decoding. Explore their evolution, strengths, & applications in NLP tasks.

Understanding Encoder-Decoder Mechanisms – EvoAI

In this comprehensive guide (covered in two articles), we explore the intricate world of encoder-decoder mechanisms and their transformative impact on artificial intelligence (AI).

The Evolution of Transformer Models in Artificial Intelligence

In this section, we explore implementations of encoder-decoder models in standard deep learning frameworks and highlight the contributions of Hugging Face’s Transformers library in making transformer-based architectures, including …

The LLM Evolutionary Tree - tokes compare

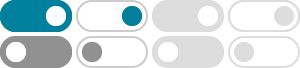

Understanding the differences between the three types of models (encoder, decoder and encoder-decoder models) is crucial for selecting the right model for the task, fine-tuning models for specific applications, managing computational …

The Evolution of Seq2Seq Models: From Encoder-Decoder to

Aug 27, 2024 · In this blog, we’ll trace the evolution of seq2seq models and highlight the key innovations that led to the Transformer architecture. Encoder-decoder architecture: The seq2seq model consists...

Exploring the Evolution of Transformers - DZone

Jul 25, 2024 · Encoder-decoder architecture: Separates the processing of input and output sequences, enabling more efficient and effective sequence-to-sequence learning. These elements combine to create a...

Evolution of NLP: From Encoder-Decoder Architectures to

Oct 11, 2024 · The journey of deep learning in natural language processing (NLP) has seen remarkable advancements, evolving from traditional RNN-based encoder-decoder models to attention-based...

Understanding the Evolution of GPT: From Encoder Decoder to …

Dec 28, 2023 · The Seq2Seq model, short for Sequence-to-Sequence model, is a type of neural network architecture that is used for tasks involving sequential data. It consists of two recurrent neural networks...

Understanding Transformer Models Architecture and Core …

Sep 28, 2024 · Since its inception, the transformer model has been a game-changer in natural language processing (NLP). It appears in three variants: encoder-decoder, encoder-only, and decoder-only. The original model was the encoder-decoder form, which provides a comprehensive view of its foundational design.
